AI in recruitment is either the most powerful tool we’ve ever had… or the fastest way to a serious lawsuit. If you work in this space, you know that finding the right person for a role takes time. From sifting through stacks of resumes to conducting interviews and following up with references, talent acquisition has always been a lengthy, human-driven process. But is that era of hiring over?

AI is no longer a futuristic concept in the hiring process. It’s rapidly becoming a core part of how recruitment is done today.

According to LinkedIn’s ‘Future of Recruiting 2025’ report, 73% of talent acquisition (TA) professionals agree that AI will change the way organizations hire. And it’s not just about efficiency: 61% think AI can improve how they measure quality of hire, offering more insight into long-term performance and fit.

However, not all AI is created equal, and not all companies are using it effectively. Workday has become the headline example of what happens when AI in recruitment goes wrong. The company is now facing a class action lawsuit alleging that its screening tools discriminated against certain groups of job applicants, such as those aged 40 or older.

The case is growing, and it’s drawing attention to a critical issue HR leaders can no longer ignore: when AI makes decisions about people without proper oversight, it doesn’t just scale efficiency; it scales bias.

At the same time, companies like Humane are showing what responsible, people-centered AI can look like in recruitment… and the difference couldn’t be clearer.

So, let’s explore how both companies have used AI in recruitment and the lessons we can take away from them.

Topics covered:

- Workday case study

- The cost of misusing AI in HR recruitment

- How Humane uses AI in recruitment

- Why Humane’s approach works

- Benefits of AI in recruitment

- How to use AI in hiring

- Famous examples of AI bias

- How to prevent AI bias in hiring

- How to measure the success of AI in hiring

Workday: A case study of what can go wrong

The lawsuit claims that Workday’s AI system unfairly discriminated against older job seekers, filtering them out of job opportunities without clear reasons or any way to appeal.

The lawsuit involves four individuals who, according to Inc., argue that the Workday algorithm “almost immediately rejected their candidacies for vacancies because they were 40 years old or older.”

Workday rejects these claims and denies using an app that filters out applications based on professional or personal characteristics designated as unwanted.

This case underscores a growing risk in HR tech:

- Opaque algorithms that don’t explain their decisions.

- Bias baked into training data.

- Lack of governance or human review.

- Vendors operating as black boxes.

When left unchecked, this kind of automation doesn’t just streamline; it alienates, excludes, and harms.

So, how does this type of discrimination happen?

Well, it’s simple. The AI algorithm learns from past data. If that data includes biased outcomes (like hiring fewer older candidates), the AI simply mirrors and magnifies it.
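To make the "mirror and magnify" mechanism concrete, here is a deliberately naive Python sketch. It assumes hypothetical historical data in which 30% of applicants under 40 and 20% of applicants aged 40 or older were hired. The "model" simply learns each group's historical hire rate, then shortlists the top-scoring new applicants. Real screening tools are far more complex, but the same failure mode applies whenever a group signal, or a proxy for it, drives the score.

```python
from collections import defaultdict

# Hypothetical historical outcomes: (age_band, was_hired). Illustrative data only.
history = (
    [("under_40", True)] * 30 + [("under_40", False)] * 70
    + [("40_plus", True)] * 20 + [("40_plus", False)] * 80
)

def learn_hire_rates(records):
    """'Train' a naive model: the historical hire rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}

def shortlist(candidates, rates, k):
    """Rank (name, group) pairs by their group's learned score; keep the top k."""
    ranked = sorted(candidates, key=lambda cand: rates[cand[1]], reverse=True)
    return ranked[:k]

rates = learn_hire_rates(history)
# Ten equally qualified new applicants: five in each age band.
applicants = [(f"cand_{i}", "under_40" if i < 5 else "40_plus") for i in range(10)]
picked = shortlist(applicants, rates, k=5)

print(rates)  # {'under_40': 0.3, '40_plus': 0.2}
print(sum(1 for _, group in picked if group == "40_plus"))  # 0
```

In this made-up history, 40% of hires (20 of 50) were aged 40 or older; in the shortlist, none are. A modest gap in past outcomes becomes total exclusion once the learned scores are used as a hard cut-off.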

Whether or not a company intends to discriminate, its AI can end up making hiring decisions based on years of human bias.

The result?

Organizations that unintentionally build exclusion into the core of their hiring process, at scale and with less visibility than ever. The question for HR isn’t whether we should use AI; it’s how, and with what guardrails.

The cost of misusing AI in HR recruitment

When we let an AI system make hiring decisions without checking how it works, we risk more than bad hires. We risk:

- Legal exposure

- Reputation damage

- Broken trust with candidates and employees

Most importantly, we lose the very thing HR is supposed to safeguard: fairness, equity, and humanity in the workplace.

How Humane uses AI in recruitment (the right way to do it)

While Workday’s story is a cautionary tale, Humane provides a compelling model of what thoughtful AI implementation in recruitment can look like.

At Humane, AI doesn’t make hiring decisions. It augments the process to make it faster, more consistent, and more collaborative while keeping humans in the driver’s seat.

Humane uses an internal AI system called Nebula to turn recruitment meetings (among other things) into immediate action. For example, the system can:

- Transcribe the conversation in real-time.

- Describe the context and history of the team, role, and org structure.

- Create a complete, tailored job description that’s generated in under a minute.

- Automatically select the interview panel based on relevance and past data.

Here’s Humane’s Chief People Officer, José Benitez Cong, talking about the process in more detail:

Why Humane’s approach works

So why does this work, when Workday’s approach seems to have failed?

Because Humane is doing three things right:

- It preserves human judgment - AI doesn’t decide who gets hired. It removes friction, not responsibility.

- It uses context-aware training - The system is fed internal, role-specific, team-aligned data, not generic job market data that could introduce bias.

- It’s built to improve the experience, not just efficiency - With more time saved on admin, recruiters and people teams invest in building relationships, improving communication, and delivering a candidate journey that reflects the company’s values.

“It’s buying us time that we used to waste… and allowing us to create a better experience — for candidates, for employees, and each other.” – José Benitez Cong, Chief People Officer, Humane.

What HR leaders are asking next

A deeper dive into the big questions behind AI in recruitment.

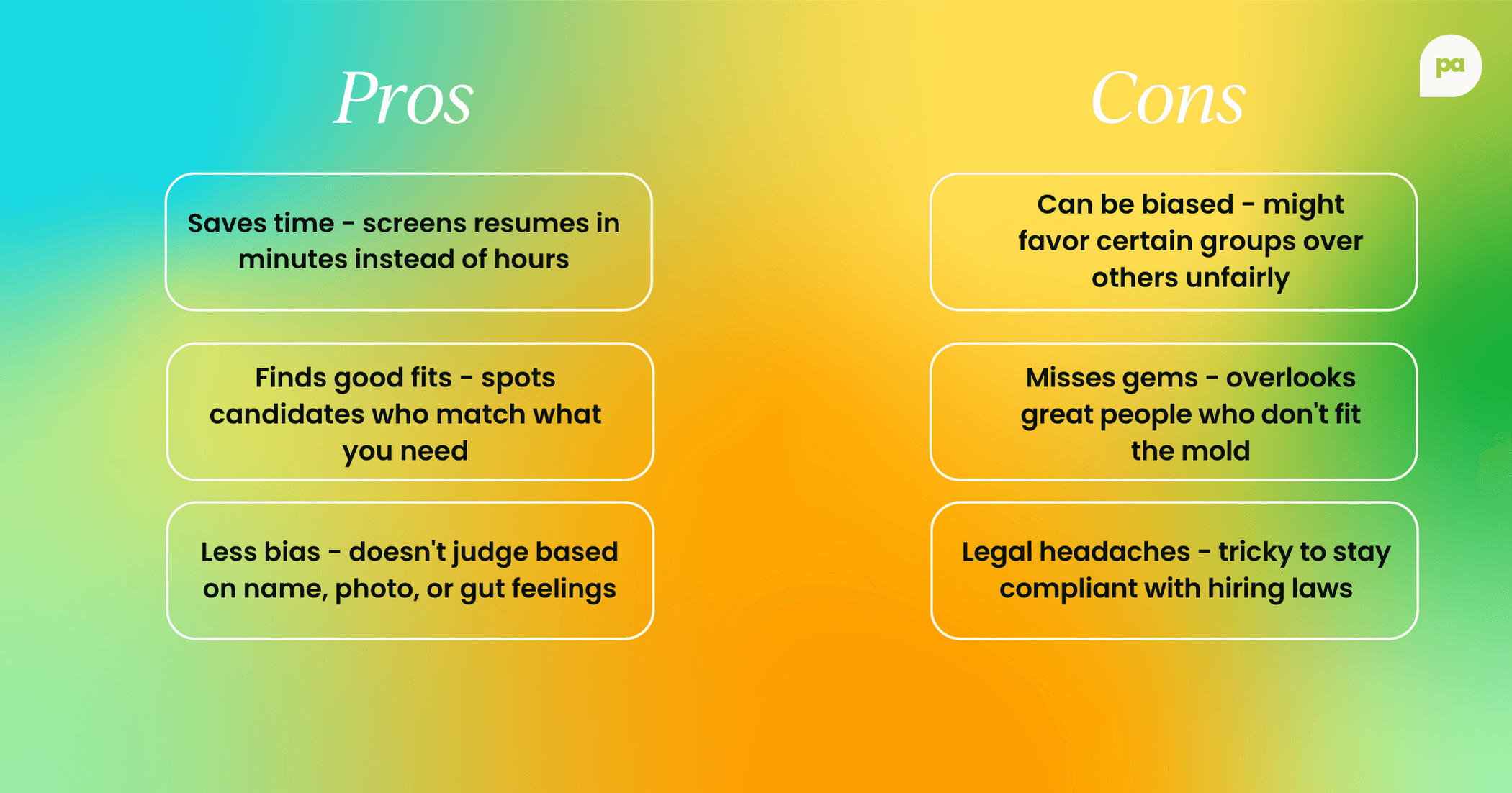

Benefits of AI in recruitment

AI in hiring streamlines the recruitment process by automating time-consuming tasks like resume screening, interview scheduling, and candidate matching. It helps teams hire faster, reduce costs, improve the quality of hires, and deliver a more consistent and engaging candidate experience. When done right, AI doesn't replace human judgment; it makes that judgment more powerful.

Here’s a snapshot of the biggest benefits of using AI in recruitment and the stats behind them:

🔶 70% of TA pros agree that using AI in hiring has improved the overall efficiency of the process. (Source)

🔶 47% agree that AI has helped them boost the effectiveness of their job posts. (Source)

🔶 Studies have shown that AI screening tools can help decrease the time-to-hire by 50% and improve the quality of hires by 40%. (Source)

🔶 AI-powered recruitment tools can reduce bias by 40%. (Source)

🔶 AI can improve the precision of hiring predictions by over 50%. (Source)

🔶 Companies leveraging AI in their hiring process report reducing recruitment costs by as much as 30 to 40%. (Source)

How to use AI in hiring

AI is transforming the hiring process, and while it won’t replace the human touch entirely, it’s becoming an indispensable tool for modern HR teams. So, let’s look at some examples of AI in recruitment:

1. Resume screening

AI can quickly scan resumes for important keywords, experience, and qualifications. This saves time compared to sifting through a stack of applications.

The system can rank candidates by how well they match the job requirements, helping you focus on the strongest matches right away. It’s no surprise that three out of four recruiters say AI tools accelerate the hiring process by enabling faster resume screening.
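As a rough illustration of keyword-based ranking, the Python sketch below scores each resume by the share of required keywords it contains. The function names, sample resumes, and keyword list are hypothetical, and the sketch handles single-word keywords only; commercial screening tools use far more sophisticated (and often opaque) models.

```python
import re

def keyword_score(resume_text, required_keywords):
    """Fraction of required (single-word) keywords that appear in the resume."""
    # Lowercase and split into word-like tokens; keep + and # for terms like C++ or C#.
    tokens = set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    hits = [kw for kw in required_keywords if kw.lower() in tokens]
    return len(hits) / len(required_keywords)

def rank_resumes(resumes, required_keywords):
    """Return (candidate, score) pairs sorted from strongest to weakest match."""
    scored = [(name, keyword_score(text, required_keywords)) for name, text in resumes.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

resumes = {
    "A": "Senior analyst with SQL, Python and Tableau experience",
    "B": "Marketing lead, some Python",
    "C": "Data engineer: Python, SQL, Airflow",
}
ranking = rank_resumes(resumes, ["python", "sql", "tableau"])
print([name for name, _ in ranking])  # ['A', 'C', 'B']
```

Even this toy shows the weakness of pure keyword matching: a candidate who writes "PostgreSQL" instead of "SQL" scores zero for that keyword, which is why keyword lists and their results still need human review.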

2. AI-powered chatbots

Some companies are using AI chatbots to handle initial candidate interactions, answering basic questions about the role or company culture, and even conducting preliminary screening interviews. They're available 24/7, which is great for candidates who might be job hunting outside regular business hours.

3. Video interview analysis

Did you know that 58% of companies use AI for video interview analysis? These tools can analyze speech patterns, facial expressions, and word choice to provide insights about candidates.

However, keep in mind that this area is still evolving and raises important questions about bias and privacy that companies need to navigate carefully.

4. Predictive analytics

According to McKinsey, predictive analytics can enhance talent matching by 67% and help HR teams make more data-driven decisions about which candidates are likely to succeed in specific roles.

By analyzing patterns from successful employees, AI can identify traits and backgrounds that correlate with good performance and longevity at the company.

5. Online assessments

AI is moving beyond simply reading resumes. It's also being used to assess candidate skills and aptitude, for example through online tests that evaluate technical skills, cognitive abilities, and even personality traits. The results can provide a more objective measure of a candidate's suitability for the role.

6. Write job descriptions

AI is also being used to write better job descriptions, making them more inclusive and appealing to diverse candidates. It can suggest language that avoids unconscious bias and helps attract a wider pool of applicants.

7. Talent sourcing

Finding it hard to source the best talent? You can try using AI to scour professional networks and job boards to proactively identify potential candidates who might not have applied but could be a great fit. It's like having a recruiter who never sleeps, constantly keeping an eye out for talent.

8. Scheduling interviews

AI can also help schedule interviews across multiple time zones and coordinate between busy calendars. Beyond scheduling, it can provide insights into market salary ranges to help HR teams make competitive offers.

What are some famous examples of AI bias?

AI bias in hiring is a serious problem that's playing out in courtrooms and workplaces around the world. Here are some well-known examples of AI biases arising in different circumstances:

Amazon and proxy discrimination

Proxy discrimination is when AI picks up on seemingly innocent factors like zip codes, schools, or hobbies that actually correlate with race, gender, or age. The system learns to discriminate without explicitly considering protected characteristics.

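In 2018, Reuters reported that Amazon had scrapped an experimental AI recruiting tool after discovering it downgraded resumes containing the word "women's", as in "women's chess club captain." The system had learned from resumes submitted to the company over a 10-year period, most of them from men.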
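One rough way to look for proxies is to check, for each value of a seemingly neutral feature, how far the share of a protected group departs from its share overall. The Python sketch below assumes hypothetical applicant records with a `club` field and a self-reported `female` flag; the 0.2 threshold is an arbitrary illustration, not a recognized standard.

```python
from collections import Counter

def proxy_report(rows, feature, protected, threshold=0.2):
    """Flag feature values whose protected-group share differs from the overall share.

    rows: list of dicts; row[protected] is True for members of the protected group.
    Returns (overall_share, {feature_value: share}) for values beyond the threshold.
    """
    overall = sum(row[protected] for row in rows) / len(rows)
    totals = Counter(row[feature] for row in rows)
    members = Counter(row[feature] for row in rows if row[protected])
    flagged = {}
    for value, count in totals.items():
        share = members[value] / count
        if abs(share - overall) > threshold:
            flagged[value] = round(share, 2)
    return overall, flagged

# Hypothetical applicant records (illustrative only).
rows = (
    [{"club": "chess", "female": True}] * 5
    + [{"club": "chess", "female": False}] * 5
    + [{"club": "women's chess", "female": True}] * 10
    + [{"club": "rugby", "female": False}] * 10
)
overall, flagged = proxy_report(rows, "club", "female")
print(overall, flagged)  # 0.5 {"women's chess": 1.0, 'rugby': 0.0}
```

A feature that lines up this closely with a protected characteristic can let a model discriminate without ever seeing that characteristic, which mirrors what happened with the word "women's" in the Amazon example.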

AI bias against race

In 2019, researchers found that an AI tool used in U.S. hospitals to predict which patients needed extra care favored white patients over Black patients. Race wasn’t used directly, but the algorithm relied on healthcare cost history (a variable correlated with race due to systemic inequalities). Black patients with the same conditions often had lower healthcare costs, leading the AI to underestimate their needs.

After intervention, the bias was reduced by 80%, but the case is a clear warning: AI can discriminate even without intent, especially when flawed proxies go unchecked.

Age discrimination

In an EEOC lawsuit, a woman applying for a tutoring job only discovered that the company's application software was automatically rejecting older applicants after she reapplied with a younger birth date. She literally had to lie about her age just to uncover the discrimination.

It's honestly pretty ironic: companies often bring in AI thinking they're solving their bias problem and making hiring more objective. Instead, they end up automating the same discrimination that was happening before, just at a much larger scale.

And because the legal system is always playing catch-up with new technology, there are people out there being discriminated against by algorithms without even knowing it's happening to them.

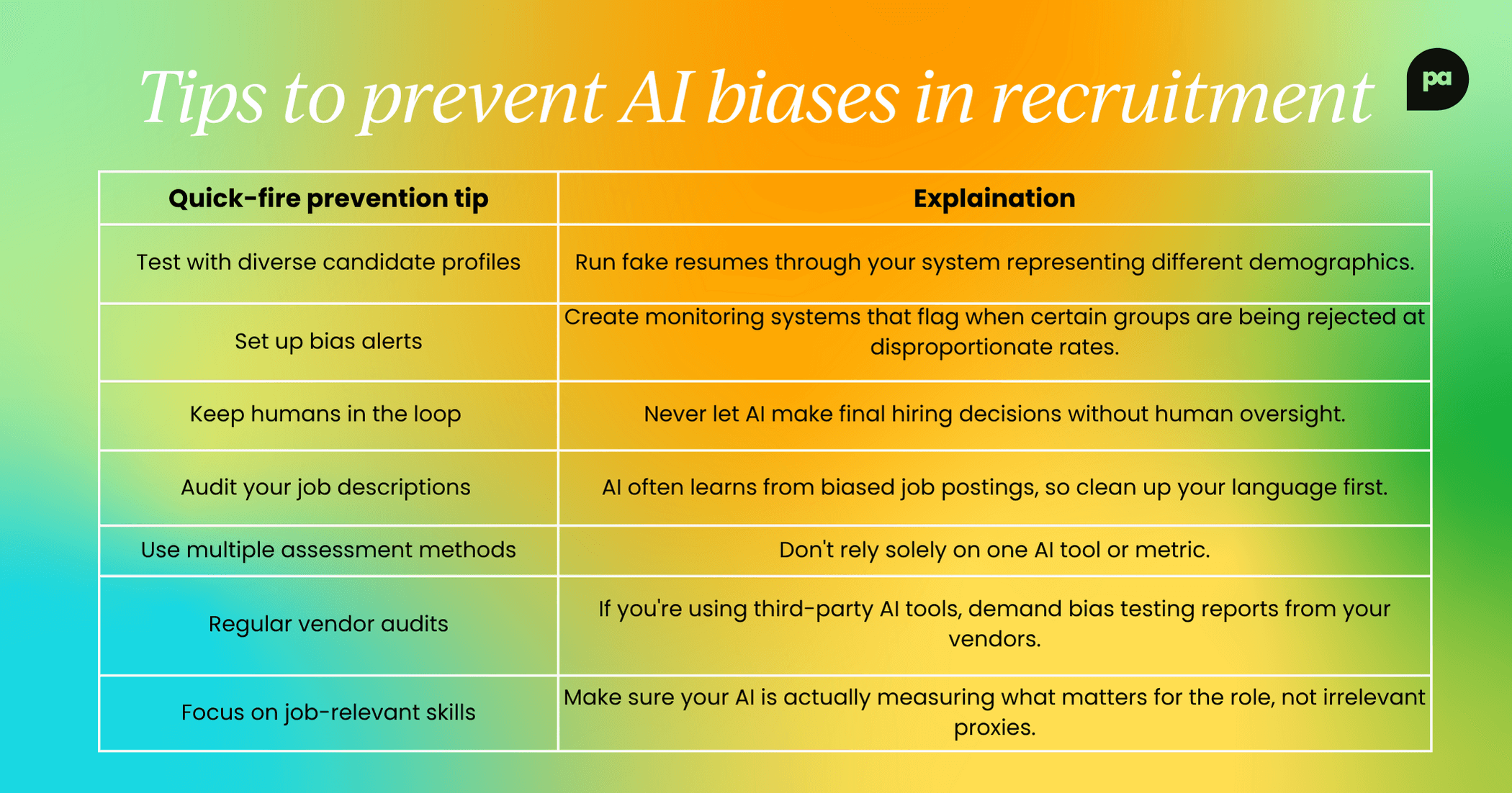

How to prevent AI bias in hiring

Preventing AI bias in hiring isn't an impossible mission. There are some pretty straightforward things companies can do; it just takes effort and ongoing attention.

Here are some tips to help prevent AI bias in recruitment:

Fix your data first

If you're feeding your AI system hiring data from the past that's full of bias, you're basically teaching it to be discriminatory. It's like training someone by showing them only bad examples.

Companies really need to take a hard look at their historical data before letting AI learn from it. Some are getting creative and using synthetic data or pulling from more diverse sources to train their systems instead of relying on their own potentially problematic history.

Get different perspectives involved

Having diverse and inclusive teams involved in developing and deploying AI systems makes a huge difference. When you've got people from different backgrounds working on these tools, they're way more likely to catch biases that others might miss. It's like having multiple sets of eyes reviewing your work: the more there are, the better the odds that someone spots the problem.

Keep checking your work

By auditing all processes, language, and behaviors, employers can minimize the chances of embedding bias in AI-powered workflows. You can't just set up an AI system and walk away. You need to regularly test whether it's treating different groups fairly. Bias has this sneaky way of creeping back in as the system processes more data over time.
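A widely used starting point for this kind of testing in the US is the "four-fifths rule" from the EEOC's Uniform Guidelines on Employee Selection Procedures: a selection rate for any group that is less than 80% of the rate for the group with the highest rate is generally regarded as evidence of adverse impact. The Python sketch below applies that ratio to hypothetical screening outcomes. It is a rule of thumb, not a legal determination, and with small samples you would also want a statistical significance test.

```python
def selection_rates(outcomes):
    """outcomes: {group: (selected, applicants)} -> {group: selection rate}."""
    return {group: selected / applicants for group, (selected, applicants) in outcomes.items()}

def adverse_impact(outcomes, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (impact_ratio, flagged)}, where flagged means the ratio
    falls below the four-fifths (0.8) threshold.
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: (round(rate / best, 2), rate / best < threshold) for group, rate in rates.items()}

# Hypothetical results from an AI screening stage.
outcomes = {"under_40": (60, 200), "40_plus": (18, 100)}
print(adverse_impact(outcomes))  # {'under_40': (1.0, False), '40_plus': (0.6, True)}
```

Running a check like this after every model or data update, not just at launch, is what catches bias creeping back in over time.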

Quick-fire prevention tips

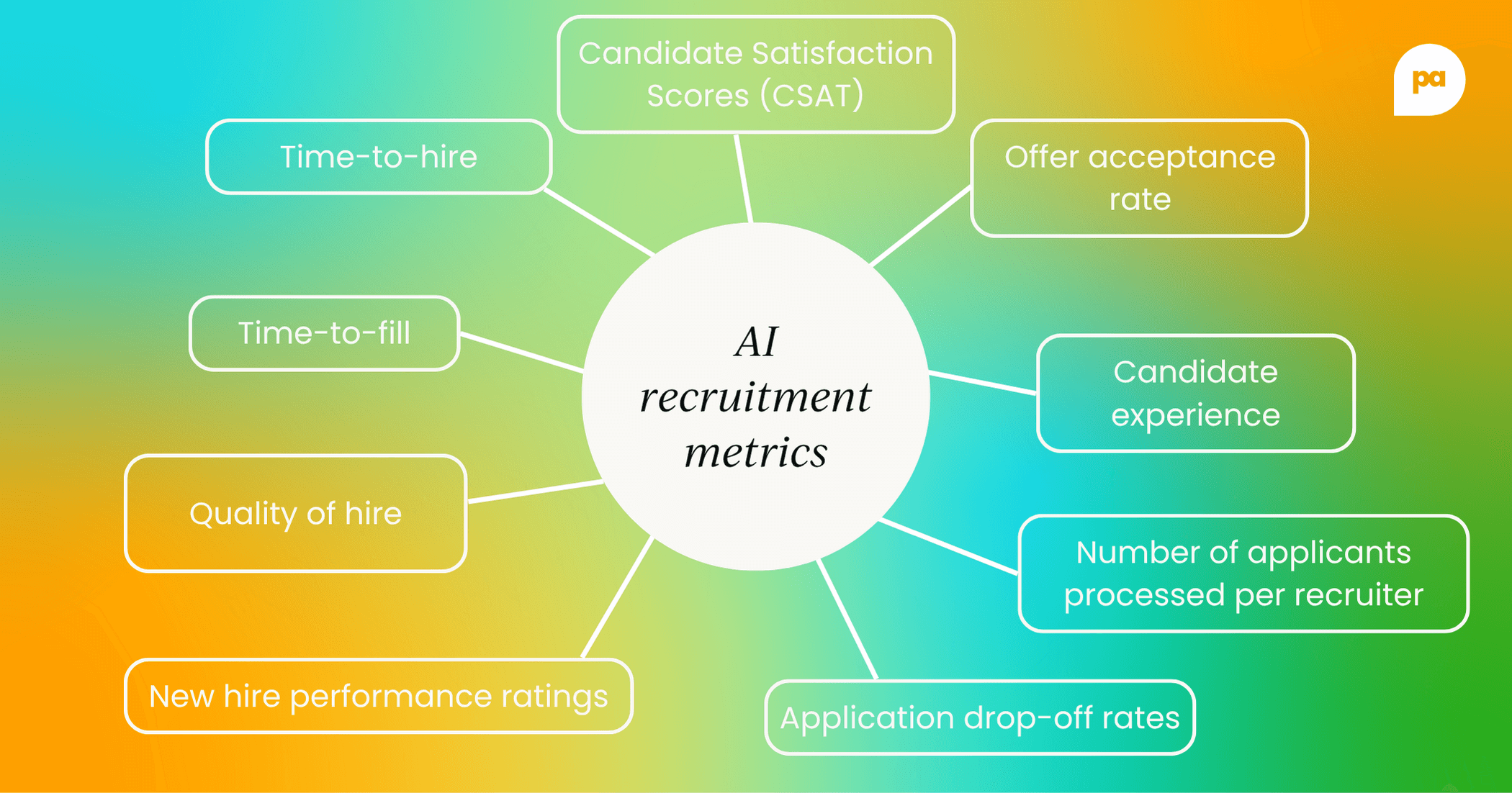

How to measure the success of AI in recruitment

It’s easy to get swept up in the promise of speed when adopting AI in recruitment. And while faster hiring definitely makes life easier for anyone in the recruitment space, speed alone isn’t proof of success.

So, how do you know if using AI in recruitment is actually paying off?

Well, it’s all about how you measure that success. You need to look at a combination of efficiency, quality, and equity. In other words: not just how quickly you hire, but who you hire, how they experience the process, and how well they perform once they’re in the role.

Key performance indicators (KPIs) that work well for measuring how effective AI is in the hiring process include:

Time-to-hire

Has using AI shortened the time between a candidate entering your pipeline (by applying or being sourced) and accepting an offer? AI's ability to quickly screen resumes and identify top talent should significantly reduce this. A shorter time-to-hire means less time with open roles and faster productivity.

Time-to-fill

Time-to-fill starts earlier, when the job requisition is approved, and ends when a candidate accepts the offer. AI should meaningfully reduce it. If your tools are working, you should see fewer delays in job description creation, candidate sourcing, and interview coordination.
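Because the two metrics use different start points, it helps to compute them side by side from the same records. The Python sketch below assumes hypothetical field names (`req_approved`, `applied`, `offer_accepted`) that you would map to whatever your applicant tracking system exports. It uses medians because a single hard-to-fill role can skew an average.

```python
from datetime import date
from statistics import median

# Hypothetical records for three filled roles; field names are illustrative.
hires = [
    {"req_approved": date(2025, 3, 3), "applied": date(2025, 3, 10), "offer_accepted": date(2025, 4, 7)},
    {"req_approved": date(2025, 3, 17), "applied": date(2025, 4, 1), "offer_accepted": date(2025, 4, 21)},
    {"req_approved": date(2025, 4, 1), "applied": date(2025, 4, 2), "offer_accepted": date(2025, 5, 13)},
]

def median_days(records, start_field, end_field="offer_accepted"):
    """Median number of calendar days between two date fields."""
    return median([(r[end_field] - r[start_field]).days for r in records])

time_to_fill = median_days(hires, "req_approved")  # requisition approved -> offer accepted
time_to_hire = median_days(hires, "applied")        # candidate applied -> offer accepted
print(time_to_fill, time_to_hire)  # 35 28
```

Comparing these medians for periods before and after introducing AI tools shows whether the time savings are real.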

Quality of hire

Look at how new hires are performing over time. Are they hitting their goals? Getting promoted? Staying engaged?

Use 30-, 60-, and 90-day check-ins and performance data to gauge whether AI is surfacing better-fit candidates.

Offer acceptance rate

If your AI-powered process helps streamline communication and personalize candidate experiences, you should see an increase in the percentage of offers accepted.

Candidate experience

Don’t just assume automation equals satisfaction. Collect candidate feedback post-interview. Was the process clear, fair, and responsive? AI should never come at the cost of the human experience.

Number of applications processed per recruiter

If AI is handling the initial heavy lifting of screening, your recruiters should be able to manage a larger volume of applications without feeling overwhelmed.

New hire performance ratings

After new hires have been with the company for a few months, how do their performance reviews stack up? Are they meeting or exceeding expectations more often? This is one of the clearest indicators of how well AI is identifying high-performing individuals.

Candidate satisfaction scores (CSAT)

Implement short surveys at different stages of the application process. Are candidates finding the AI chatbot helpful? Is the application process clearer? Positive scores indicate a good experience.

Application drop-off rates

Are fewer candidates abandoning their applications mid-way? A streamlined, AI-assisted process should reduce friction and lead to lower drop-off rates.
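An overall drop-off rate hides where candidates give up, so it's worth breaking it down by stage. The Python sketch below uses hypothetical stage names and counts; substitute the stages of your own application flow.

```python
# Hypothetical counts of candidates reaching each stage of an online application.
funnel = [
    ("started", 1000),
    ("uploaded_resume", 820),
    ("completed_assessment", 610),
    ("submitted", 580),
]

def drop_off_rates(stages):
    """Share of candidates lost between each pair of consecutive stages."""
    rates = []
    for (stage_a, count_a), (stage_b, count_b) in zip(stages, stages[1:]):
        rates.append((f"{stage_a} -> {stage_b}", round(1 - count_b / count_a, 3)))
    return rates

for step, rate in drop_off_rates(funnel):
    print(f"{step}: {rate:.1%}")
```

In this made-up funnel, the step into the assessment loses the largest share of candidates (about 25.6%), so that is where you would look first for friction.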

Diversity metrics of applicant pool vs. hires

Are we seeing an increase in the representation of diverse candidates in our applicant pools and, more importantly, in our hires? AI should help broaden our reach and present a more diverse candidate slate.
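A simple way to track this is to compare each group's share of the applicant pool with its share of hires. The Python sketch below uses hypothetical group labels and counts; a persistent negative gap for a group is a signal to audit the stages in between.

```python
def shares(counts):
    """Convert {group: count} into {group: share of total}."""
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def representation_gap(applicants, hires):
    """Hire share minus applicant-pool share per group (negative = under-represented in hires)."""
    pool, hired = shares(applicants), shares(hires)
    return {group: round(hired.get(group, 0.0) - pool[group], 3) for group in pool}

# Hypothetical counts for one hiring period.
applicants = {"group_a": 600, "group_b": 400}
hires = {"group_a": 45, "group_b": 15}
print(representation_gap(applicants, hires))  # {'group_a': 0.15, 'group_b': -0.15}
```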

Bias score reduction

Some advanced AI tools can even provide metrics on potential bias in language used in job descriptions or in the screening process. A reduction in these scores indicates progress.

By tracking these KPIs, you can show whether AI is truly adding value to your recruitment efforts: not just as a technological upgrade, but as a strategic advantage for your organization's most valuable asset, its people.

Let’s keep the conversation going

Our events are designed for people leaders who want to think differently, ask the tough questions, and connect with others navigating the same changes in the world of work.

And yes, we've got a lot of upcoming events and sessions on leveraging AI in HR, what to avoid, how to do it right, and so much more.

Check out our upcoming events and come join the conversation. We’d love to see you there!

Follow us on LinkedIn
